Generative AI (GenAI) promises to revolutionise higher education. Whether it concerns law students using ChatGPT to write their essays, computer science majors relying on GitHub Copilot to generate programming code, or art students turning to Midjourney to create artwork, the AI tools that can assist with educational assignments are readily available online. The ability of students to complete parts of their curriculum by means of automated tools has caused unease in academic communities, given the growing inability to distinguish reliably between honest student work and AI-generated submissions. Academic integrity and plagiarism issues in this context ultimately also lead us to copyright law. For instance, can students claim AI-generated output as their own intellectual creation? Do students expose themselves to liability for copyright infringement when using GenAI output? This blog post – based on our journal article published in the European Intellectual Property Review – takes a closer look at these questions, while also addressing the wider tension between GenAI and copyright.

I. GenAI at odds with copyright law?

The interplay between copyright law and AI has received a great deal of attention following the surge in popularity of ChatGPT. This is understandable when one considers the inner workings of GenAI applications. Take training data: AI models are trained by parsing immense textual and visual datasets, which may include copyright-protected works. As a result, these works may be reproduced by AI applications (Figure 1). This topic attracted significant interest when, in late December 2023, the New York Times sued OpenAI and Microsoft in the US, claiming that the (unauthorised) use of NYT articles for AI model training constitutes copyright infringement.

Another much-discussed issue is whether AI-generated works meet the threshold of originality to merit copyright protection. A related question then arises as to who would be able to claim ownership of such a work: the person who provided the input prompt, or perhaps the AI tool itself? In any case, a discussion is needed on what it means to be “an author” in the context of AI-generated output (see, for instance, here and here).

II. GenAI policies in UK universities

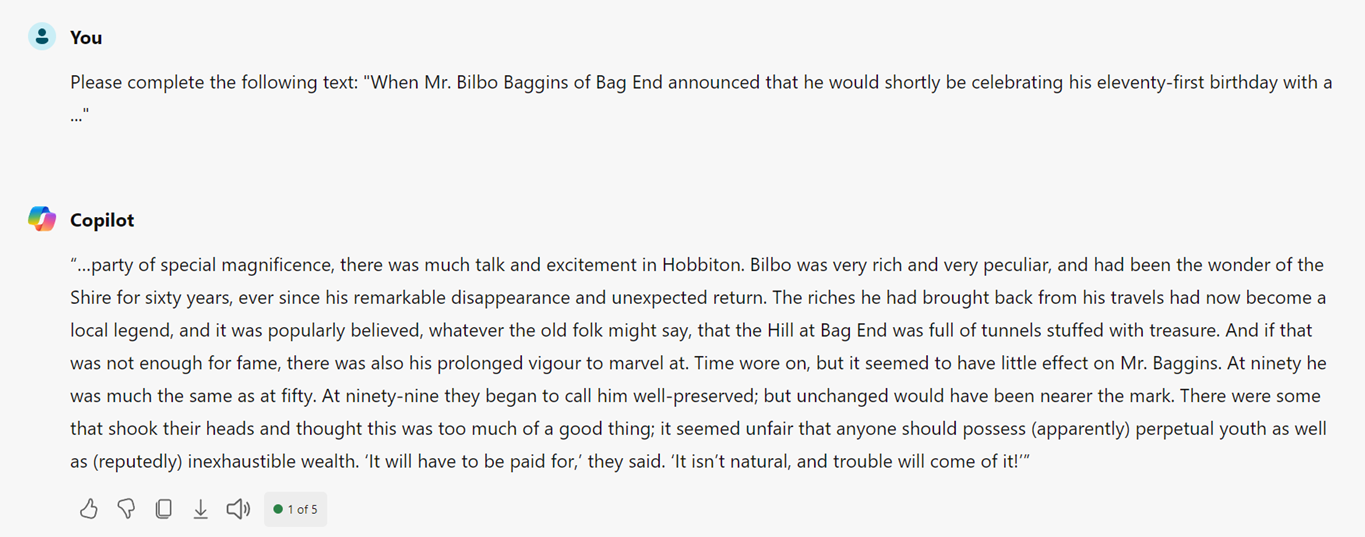

Through Freedom of Information (FoI) requests, we invited UK Higher Education Institutions (HEIs) to clarify their stance on GenAI. Between 25 August 2023 and 13 November 2023, 119 of the 134 universities contacted responded. The data reveal that 94 of the responding HEIs (~79%) have either formally or informally stated their position in relation to GenAI (Figure 2). Among these 94, there was a notable trend either to adopt guidance on GenAI (50 HEIs; ~53%) or to add new provisions on GenAI to existing academic misconduct policies (31 HEIs; ~33%). This preference may stem from the flexibility that guidance and policy updates offer compared to more rigid, formally adopted rules.

III. Permissibility of using GenAI in an academic setting

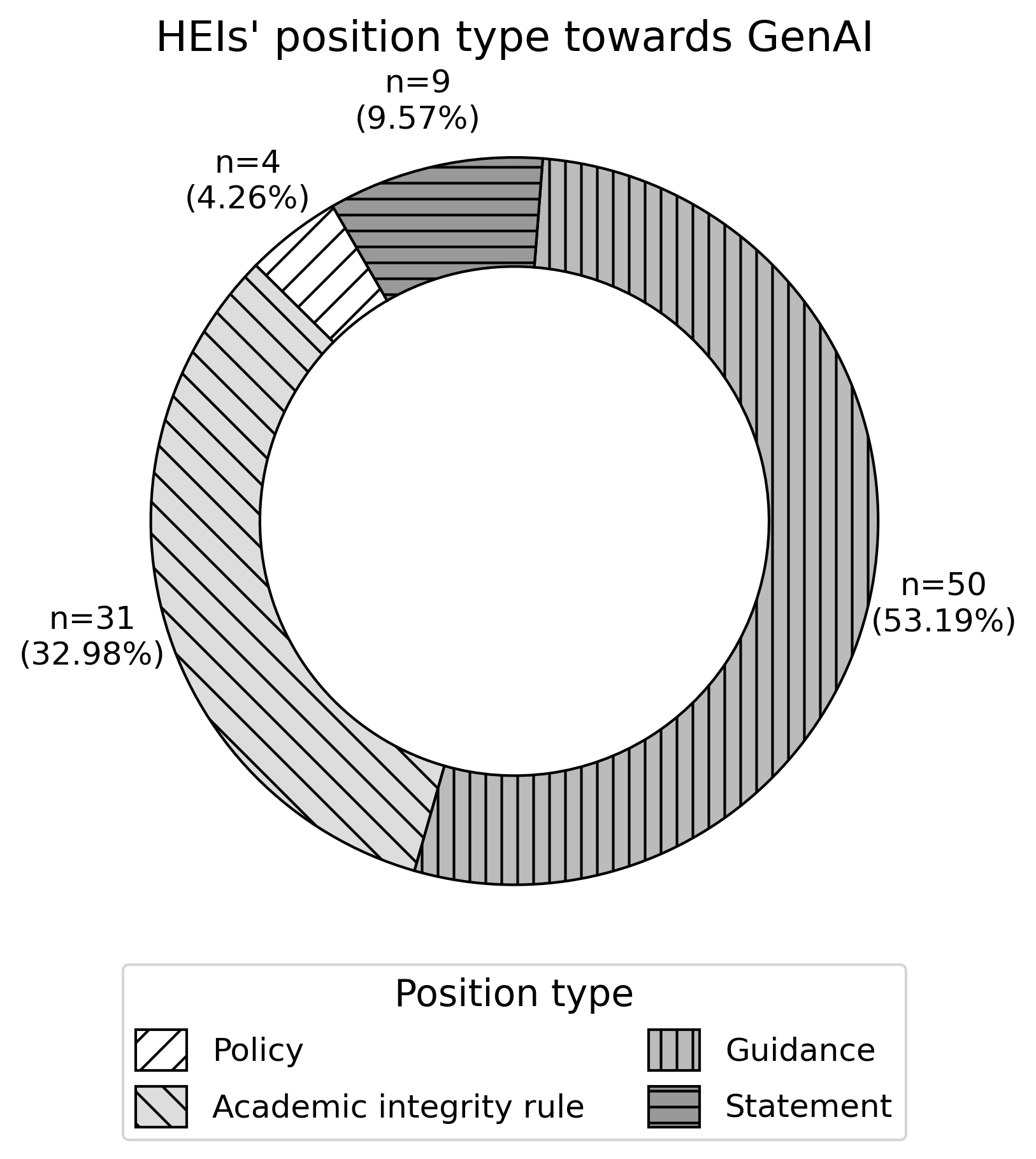

Further analysis showed that HEIs exercise the most caution when it comes to summative assessments, with only 36 HEIs (~30% of respondents) allowing the use of GenAI (30 answering ‘conditional yes’ and 6 answering ‘yes’). The data also indicate a clear divide between attitudes towards GenAI as a learning or revision tool and towards its use during formative or summative assessments (Figure 3).

One question in the FoI request focused specifically on whether the use of GenAI could fall under the HEI’s definition of academic misconduct. Of the respondents, 102 HEIs (~86%) answered this question in the affirmative, noting that students risk committing academic misconduct if, for example, they use GenAI outside of permitted use cases. A closer analysis of the responses suggests that the concerns relate mostly to students’ ability to bypass the learning process. Beyond situations where students submit work that is not their own, respondents also voiced concern that over-reliance on GenAI to complete assignments could fundamentally undermine the development of critical thinking skills.

IV. Is AI-generated output a student’s own intellectual creation?

Students are expected to submit original work for their assignments. This requirement notwithstanding, it is plausible that GenAI output could sometimes be passed off as ‘original’, thus raising the question of whose intellectual creation it is.

First, it should be noted that the Terms of Use of many GenAI tools are quite clear regarding ownership, stating that the user of the AI application holds the relevant ownership rights in both the input and the (AI-generated) output (Figure 4). From the perspective of HEIs, however, universities clearly tend not to regard AI-generated work as the student’s own intellectual creation. Yet the wording used by universities suggests that the issue is not so much about copyright law as about academic integrity and transparency. From a purely legal point of view, then, one might ask whether AI-generated work could be considered a student’s own intellectual creation. The relevant benchmark for answering this question in the EU (and also in the UK, since the test was introduced before Brexit) is that of ‘originality’: the work must reflect the personality of the author and their free and creative choices (Case C-145/10 – Painer).

One argument in favour of treating such output as the student’s own intellectual creation is that these ‘free and creative choices’ are expressed through the prompts the student uses, and that creativity applied at the level of the input prompt is subsequently reflected in the output text. On this view, AI-generated output meets the threshold of originality if the input prompt is original. This line of thinking is akin to the choices a photographer makes regarding composition, subject position, depth of field, etc. before pressing the shutter-release button. A counter-argument, however, is that the creative link between the student’s input prompt and the AI-generated output is too weak for the output text to be considered the student’s own intellectual creation. Even if the student expresses originality in the input prompt, and even if this influences the generation of the output, this may justify ownership of the prompt itself; it is questionable whether that ownership could extend to the resulting answer, which is entirely dependent on the operation of the GenAI tool.

Ultimately, drawing on Case C-393/09 – Bezpečnostní softwarová asociace, in which the CJEU held that the criterion of originality is not met where expression is dictated by technical function, purely AI-generated works, whose output is for the most part dictated by the constraints of the AI system, will likely not qualify for copyright protection. Conversely, if a student puts substantial human creative input into the prompt, yielding an output that reflects the student’s creative choices, this would arguably be sufficient to consider the AI-generated output the student’s own intellectual creation.

V. Do students expose themselves to liability for copyright infringement by using AI-generated content?

When considering whether a student, once identified as the author of GenAI content, could be held liable for copyright infringement, three distinct criteria need to be evaluated (section 16 of the Copyright, Designs and Patents Act 1988 (CDPA)):

- Is a restricted act being carried out without the permission of the rightsholder?

- Is the restricted act carried out in relation to a substantial part of a copyright-protected work?

- Is the student’s work derived from the copyright-protected work?

With regard to the first criterion, an infringement of the right of reproduction comes to mind if a student copies text from an AI tool into their assignment and that text turns out to be derived from a copyright-protected work. Regarding the second criterion, if the AI output reproduces a substantial part of a protected work and the student incorporates it into their assignment, either verbatim or with minor variations, then the act will have been carried out in relation to a substantial part of another author’s work. A slightly more challenging issue is establishing a causal connection between the original work and the student’s derivative work (i.e., the third criterion). However, obvious similarities between the two works make it all the more likely that they are causally linked.

On balance, it does seem possible that a student could commit copyright infringement by using AI-generated output. In response to this risk, students may be able to raise certain defences, for example by using screenshots and metadata from the GenAI tool to prove that they sourced the material from that tool rather than from the original source.

VI. Concluding observations

The rapid spread of GenAI tools has left its mark on academia. To date, the copyright implications of using ChatGPT and its peers remain unresolved. Most universities are taking a stance on GenAI in education by issuing policies, guidelines, etc. to ensure the responsible use of these tools. At the same time, the core copyright issues are still often overlooked or misunderstood. It is hoped that recent legislative developments and several pending court cases will bring greater clarity to this area.

________________________

To make sure you do not miss out on regular updates from the Kluwer Copyright Blog, please subscribe here.